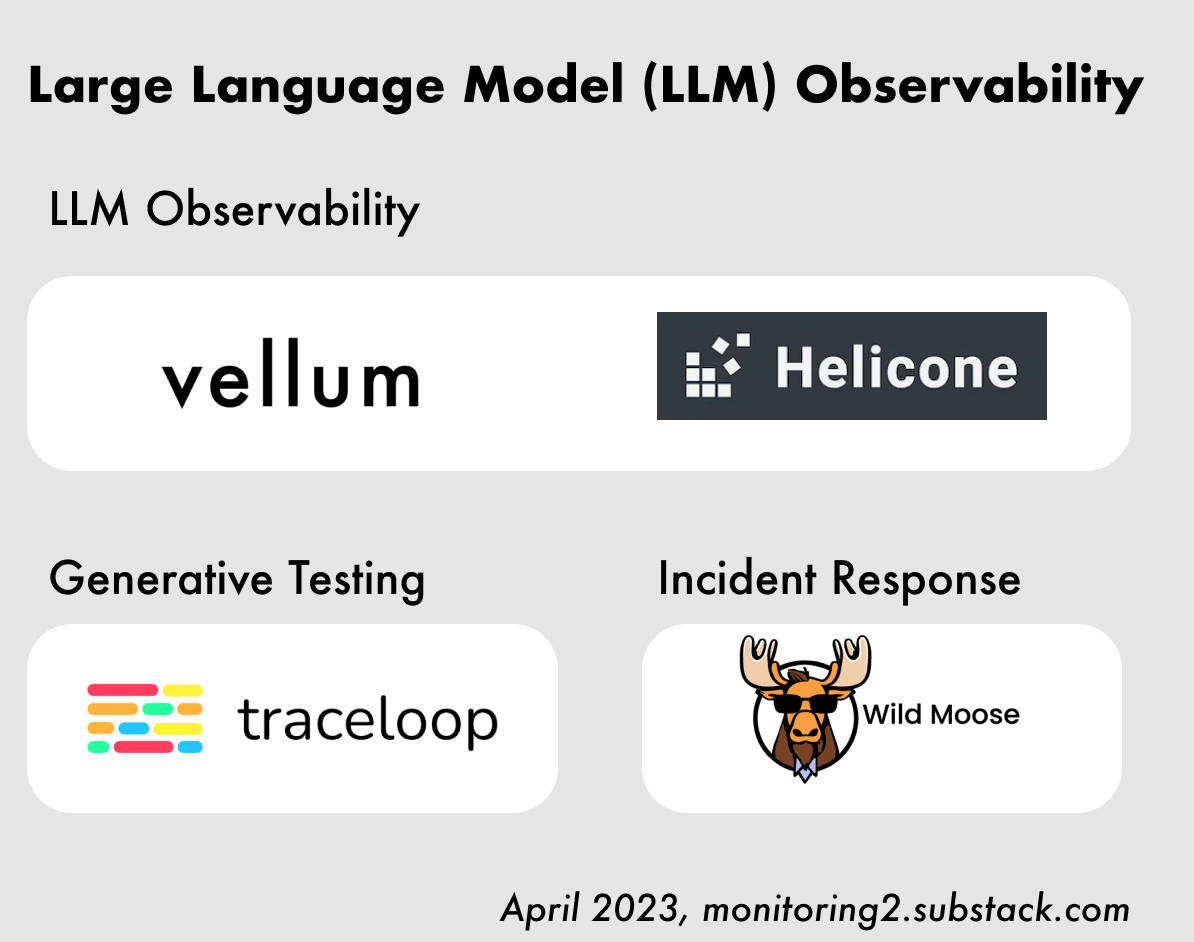

Large Language Model Observability

Startups leveraging LLMs for monitoring, testing, and incident response

Welcome back to Monitoring Monitoring. This issue highlights companies focused on solving observability challenges for teams building applications using Large Language Models (LLMs) like OpenAI’s GPT-4.

The current (April 2023) technology hype cycle around LLMs is unlike anything in recent history. It’s clear something is happening. Last Friday, around 5,000 people showed up for the San Francisco Open Source AI meetup, roughly as many people as attended KubeCon North America in person last year. At least one person compared the AI meetup to Woodstock.

Let’s take a look at some startups focused on solving the problems of the many developers and companies rushing into the space to build new applications on top of LLMs:

LLM Observability

Developers building LLM-based apps aren’t necessarily writing a lot of code. Much of their work is writing English-language prompts that get sent to an API (“AI as a service”). Here’s an example input to OpenAI’s GPT-3:

Input prompt: Write a tweet about how a large language model takes an input and produces an output. Put the tweet in square brackets.

Output from API: [Large language models use advanced AI techniques to analyze and comprehend an input, and generate a response that reflects the learned patterns and relationships within the input sequence. #AI #LanguageModels]
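The prompt asks for the tweet in square brackets so that the application code around the API call can pull it back out of the response. Here is a minimal sketch of that step. It assumes the response has already arrived as a plain string, and `extract_bracketed` is a hypothetical helper, not part of any SDK:

```python
import re
from typing import Optional


def extract_bracketed(response_text: str) -> Optional[str]:
    """Return the text inside the first [...] pair, or None if the model ignored the format."""
    # Non-greedy match so only the first bracketed span is captured;
    # DOTALL lets the tweet span multiple lines.
    match = re.search(r"\[(.*?)\]", response_text, re.DOTALL)
    return match.group(1).strip() if match else None


output = (
    "[Large language models use advanced AI techniques to analyze and comprehend "
    "an input, and generate a response that reflects the learned patterns and "
    "relationships within the input sequence. #AI #LanguageModels]"
)
print(extract_bracketed(output))
```

Nothing guarantees the model will follow the formatting instruction, so the `None` branch matters. It is also one of the quality signals worth tracking over time.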

This “low code” technique makes some things easier but other things harder. For example, how do you track the quality of the prompts you are writing? How do you optimize prompts for fast responses, or choose the best model? And what about cost optimization, since API usage can get fairly expensive?

Y Combinator-backed Helicone helps teams answer those questions by intercepting their OpenAI API calls with a single line of code and summarizing the requests in a dashboard.
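Helicone’s actual integration isn’t shown here. As a rough illustration of the general idea, the sketch below wraps every LLM call so that latency, token usage, and estimated cost are recorded per request and then rolled up into summary numbers. `fake_llm`, its token counts, and the flat per-1K-token price are all hypothetical placeholders, not OpenAI’s API or pricing:

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass
class CallRecord:
    """One intercepted request: what was sent, what came back, and what it cost."""
    prompt: str
    output: str
    latency_s: float
    total_tokens: int
    cost_usd: float


@dataclass
class LLMLogger:
    # Hypothetical flat price; real providers price per model and per input/output token.
    price_per_1k_tokens: float
    records: list[CallRecord] = field(default_factory=list)

    def wrap(self, llm_call: Callable[[str], tuple[str, int]]) -> Callable[[str], str]:
        """Return a drop-in replacement for llm_call that records every request."""
        def logged(prompt: str) -> str:
            start = time.perf_counter()
            output, total_tokens = llm_call(prompt)
            latency = time.perf_counter() - start
            cost = total_tokens / 1000 * self.price_per_1k_tokens
            self.records.append(CallRecord(prompt, output, latency, total_tokens, cost))
            return output
        return logged

    def summary(self) -> dict[str, float]:
        """Aggregate numbers of the kind a dashboard would chart."""
        latencies = [r.latency_s for r in self.records]
        return {
            "requests": len(self.records),
            "total_tokens": sum(r.total_tokens for r in self.records),
            "total_cost_usd": sum(r.cost_usd for r in self.records),
            "mean_latency_s": mean(latencies) if latencies else 0.0,
        }


def fake_llm(prompt: str) -> tuple[str, int]:
    # Hypothetical stand-in for a real API call: no network, made-up token count.
    return f"[echo: {prompt}]", len(prompt.split()) * 2


logger = LLMLogger(price_per_1k_tokens=0.002)
ask = logger.wrap(fake_llm)  # the application now calls ask() instead of fake_llm()
ask("Write a tweet about observability.")
print(logger.summary())
```

The application code changes in only one place: which function it calls. That is the appeal of the interception approach. The raw `records` list keeps per-request detail, and `summary()` derives the aggregate view from it.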

Vellum, a Winter 2023 Y Combinator startup, is building a more general-purpose platform for developing, monitoring, and fine-tuning LLM applications. As Vellum’s docs put it, the critical piece is capturing all the inputs and outputs that pass between a developer’s application and the LLM: “Every model input, output, and end-user feedback is captured and made visible at both the row-level and in aggregate.”
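To make “row-level and in aggregate” concrete, here is a minimal in-memory sketch. It is not Vellum’s implementation. Each model call is stored as its own row, end-user feedback is attached to that row later, and an aggregate view compares approval rates across prompt versions. The `prompt_version` labels and the thumbs-up/down feedback model are illustrative assumptions:

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Completion:
    """One row: a single model input/output pair plus optional end-user feedback."""
    row_id: int
    prompt_version: str              # hypothetical label for the prompt template used
    model_input: str
    model_output: str
    feedback: Optional[bool] = None  # True = thumbs up, False = thumbs down, None = unrated


class CompletionStore:
    def __init__(self) -> None:
        self.rows: list[Completion] = []

    def capture(self, prompt_version: str, model_input: str, model_output: str) -> int:
        """Record every call at the row level and return its id for later feedback."""
        row_id = len(self.rows)
        self.rows.append(Completion(row_id, prompt_version, model_input, model_output))
        return row_id

    def record_feedback(self, row_id: int, thumbs_up: bool) -> None:
        self.rows[row_id].feedback = thumbs_up

    def approval_by_version(self) -> dict[str, float]:
        """Aggregate view: share of rated rows with positive feedback, per prompt version."""
        votes: dict[str, list[bool]] = defaultdict(list)
        for row in self.rows:
            if row.feedback is not None:
                votes[row.prompt_version].append(row.feedback)
        return {version: sum(v) / len(v) for version, v in votes.items()}


store = CompletionStore()
a = store.capture("v1", "Write a tweet about LLMs.", "[LLMs turn inputs into outputs. #AI]")
b = store.capture("v1", "Write a tweet about tests.", "Tests help.")  # ignored the brackets
c = store.capture("v2", "Write a tweet about LLMs.", "[LLMs learn patterns. #AI]")
store.record_feedback(a, thumbs_up=True)
store.record_feedback(b, thumbs_up=False)
store.record_feedback(c, thumbs_up=True)
print(store.approval_by_version())  # {'v1': 0.5, 'v2': 1.0}
```

The aggregate tells you that prompt version `v2` is doing better. The rows let you drill into `store.rows[b]` and see *why* `v1` got a thumbs down: it ignored the formatting instruction. A real platform would persist these rows rather than keep them in memory.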

If these “LLMOps” (sorry) companies are successful in helping their customers build amazing new services using this technology, how could that change monitoring, testing, and DevOps?

Generative Testing and Incident Response

The best-known AI tool in development and technical workflows to date is GitHub Copilot (“Your AI Pair Programmer”). Startups are now exploring what happens when the same underlying technology automates more than just writing code. Traceloop, for example, says on its website that it can combine distributed traces with generative AI to automatically generate tests and improve reliability.

Wild Moose ambitiously takes on the incident response space with a Slack chatbot that answers questions about the entire state of your system during critical incidents… and then writes up what happened as an executive summary afterwards. As they say on their site, “Unlike humans, our AI is happy to wake up at 2 AM.”


A 2020 issue of this newsletter linked to a well-known PowerPoint deck from Princeton called “How to recognize AI snake oil.” The presentation made a point that still holds: despite some legitimate technical breakthroughs, the AI hype cycle more often produces “fundamentally dubious” marketing claims about what various products or solutions can do.

We’re almost certainly going to see many, many more of these startups focused on solving problems in DevOps, monitoring, observability… or just figuring out how to interact with Kubernetes in plain English.

Will we be able to get a quick and relevant summary of what’s going on after an on-call page at 2 AM? Will an APM provider’s chatbot ask you out on a date after sharing some latency metrics?

Subscribe to the newsletter to follow what startups are building, or follow along on Twitter.

Disclosure: Opinions are strictly my own and not my employer’s. I am not a consultant to, an employee of, or an investor in any of the companies mentioned. There are no paid placements, sponsorships, or advertisements in this newsletter. This post was not written by an LLM, but I did have one generate some potential titles, which I rejected. It also suggested I rename the newsletter to “Observing Observability”…